AUTO ENCODER

This notebook shows how autoencoders are trained in TensorFlow (Keras). It covers two types: a standard *Autoencoder* and a *Denoising Autoencoder*.
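The two models differ only in what the network sees as input. The equations below state both objectives in this notebook's setting: 784-pixel inputs, the `binary_crossentropy` loss, and `noise_factor = 0.4`. The symbols f (encoder, 784 → 128 → 32), g (decoder, 32 → 128 → 784) and σ (noise level) are notation introduced here for illustration.

```latex
\begin{aligned}
\text{Autoencoder:}\quad & \min_{f,\,g}\; \mathcal{L}\big(x,\; g(f(x))\big) \\
\text{Denoising autoencoder:}\quad & \tilde{x} = \operatorname{clip}_{[0,1]}\big(x + \sigma\,\varepsilon\big),\quad \varepsilon \sim \mathcal{N}(0, I),\quad \sigma = 0.4, \\
& \min_{f,\,g}\; \mathcal{L}\big(x,\; g(f(\tilde{x}))\big) \\
\text{Binary cross-entropy:}\quad & \mathcal{L}(x, \hat{x}) = -\frac{1}{d}\sum_{j=1}^{d}\big[x_j \log \hat{x}_j + (1 - x_j)\log(1 - \hat{x}_j)\big],\quad d = 784
\end{aligned}
```

In both cases the target is the clean image x. Only the input changes, from x to its noisy copy x̃.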

NORMAL AUTO ENCODER

LOADING LIBRARIES


```python
%matplotlib inline
%config InlineBackend.figure_format = 'retina'

import warnings

import matplotlib.pyplot as plt
import numpy as np
import pandas as pd      # not used below
import seaborn as sns    # not used below

warnings.filterwarnings('ignore')

from keras.models import Model
from keras.layers import Dense, Input
from keras.datasets import mnist
from keras.regularizers import l1    # not used below
from keras.optimizers import Adam    # not used below ('adam' is passed by name)
```

FOR PLOTTING OUTPUTS


```python
def plot_autoencoder_outputs(autoencoder, n, dims):
    # Reconstruct the first n test images (uses the global x_test loaded below)
    decoded_imgs = autoencoder.predict(x_test[:n])

    plt.figure(figsize=(10, 4.5))
    for i in range(n):
        # plot original image (top row)
        ax = plt.subplot(2, n, i + 1)
        plt.imshow(x_test[i].reshape(*dims))
        plt.gray()
        ax.get_xaxis().set_visible(False)
        ax.get_yaxis().set_visible(False)
        if i == n // 2:  # put the row title on the middle column
            ax.set_title('Original Images')

        # plot reconstruction (bottom row)
        ax = plt.subplot(2, n, i + 1 + n)
        plt.imshow(decoded_imgs[i].reshape(*dims))
        plt.gray()
        ax.get_xaxis().set_visible(False)
        ax.get_yaxis().set_visible(False)
        if i == n // 2:
            ax.set_title('Reconstructed Images')
    plt.show()
```

TRAIN TEST SPLITS


```python
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# Scale pixel values from 0-255 to [0, 1]
x_train = x_train.astype('float32') / 255.0
x_test = x_test.astype('float32') / 255.0
```

RESHAPED FOR PROCESSING


```python
# Flatten each 28x28 image into a 784-dimensional vector for the Dense layers
x_train = x_train.reshape((len(x_train), np.prod(x_train.shape[1:])))
x_test = x_test.reshape((len(x_test), np.prod(x_test.shape[1:])))
print(x_train.shape)
print(x_test.shape)
```

(60000, 784)

(10000, 784)
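The same flattening can also be written with `-1`, which lets NumPy infer the 28 × 28 = 784 length. It can be undone with a second reshape when an image needs to be plotted. This small sketch uses random arrays in place of MNIST to show that both spellings agree and that the round trip is lossless.

```python
import numpy as np

# Random stand-in for a batch of 10 MNIST images (assumption: not the real data)
images = np.random.default_rng(0).uniform(size=(10, 28, 28)).astype('float32')

flat_prod = images.reshape((len(images), np.prod(images.shape[1:])))  # notebook's spelling
flat_auto = images.reshape(len(images), -1)                           # -1 infers 784
restored = flat_auto.reshape(-1, 28, 28)                              # back to 28x28 images

print(flat_auto.shape)  # → (10, 784)
```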

MODEL CREATION


```python
input_size = 784   # 28 x 28 pixels, flattened
hidden_size = 128
code_size = 32     # size of the bottleneck (the learned code)

input_img = Input(shape=(input_size,))
hidden_1 = Dense(hidden_size, activation='relu')(input_img)
code = Dense(code_size, activation='relu')(hidden_1)
hidden_2 = Dense(hidden_size, activation='relu')(code)
output_img = Dense(input_size, activation='sigmoid')(hidden_2)  # sigmoid keeps outputs in [0, 1]

autoencoder = Model(input_img, output_img)
autoencoder.compile(optimizer='adam', loss='binary_crossentropy')

# The input is also the target: the network learns to reproduce x_train
autoencoder.fit(x_train, x_train, epochs=3)
```

Epoch 1/3

1875/1875 [==============================] - 29s 2ms/step - loss: 0.1880

Epoch 2/3

1875/1875 [==============================] - 5s 3ms/step - loss: 0.1003

Epoch 3/3

1875/1875 [==============================] - 4s 2ms/step - loss: 0.0950

<keras.callbacks.History at 0x7fc58b7709d0>

MODEL SUMMARY (ARCHITECTURE)

```
Model: "model"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 input_1 (InputLayer)        [(None, 784)]             0
_________________________________________________________________
 dense (Dense)               (None, 128)               100480
_________________________________________________________________
 dense_1 (Dense)             (None, 32)                4128
_________________________________________________________________
 dense_2 (Dense)             (None, 128)               4224
_________________________________________________________________
 dense_3 (Dense)             (None, 784)               101136
=================================================================
Total params: 209,968
Trainable params: 209,968
Non-trainable params: 0
_________________________________________________________________
```
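Each `Dense` layer has one weight per input/output pair plus one bias per output unit. The short check below reproduces every `Param #` in the summary from the layer sizes 784 → 128 → 32 → 128 → 784. The helper name `dense_params` is introduced here for illustration.

```python
def dense_params(n_in, n_out):
    # weight matrix (n_in * n_out) plus one bias per output unit
    return n_in * n_out + n_out

sizes = [784, 128, 32, 128, 784]
per_layer = [dense_params(a, b) for a, b in zip(sizes[:-1], sizes[1:])]
total = sum(per_layer)
print(per_layer, total)  # → [100480, 4128, 4224, 101136] 209968
```

The two outer layers hold almost all of the roughly 210k parameters. The 32-unit bottleneck is cheap.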

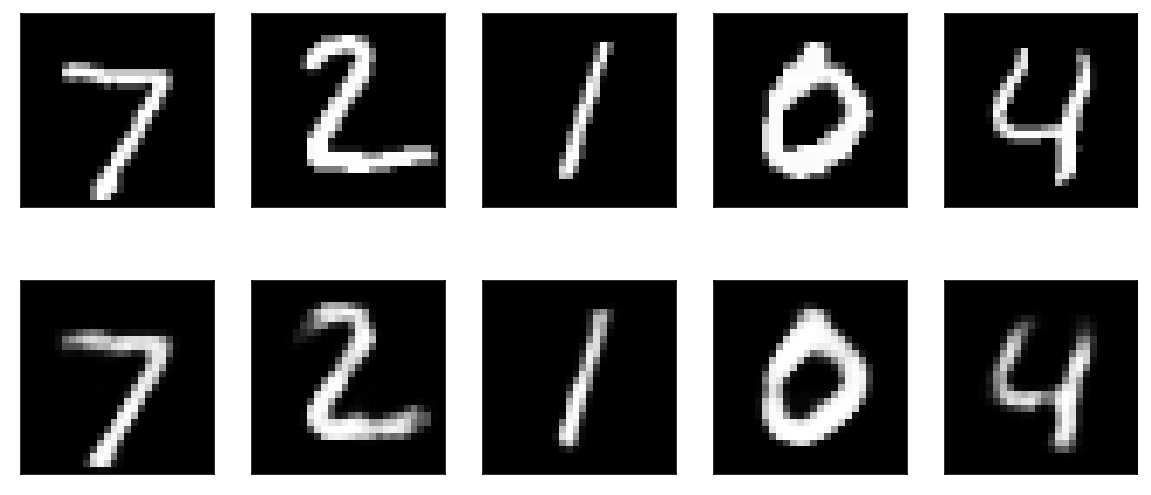
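To make the architecture concrete, here is a minimal NumPy sketch of one forward pass through the same layer stack, together with the binary cross-entropy the model minimises. It is not the Keras implementation. The Glorot-uniform initialisation mirrors the `Dense` default, but these weights are untrained. The `1e-7` clipping constant is an assumption, and the batch is random data standing in for flattened MNIST images.

```python
import numpy as np

rng = np.random.default_rng(0)

def dense(n_in, n_out):
    # Glorot-uniform kernel and zero bias (mirrors the Keras Dense defaults)
    limit = np.sqrt(6.0 / (n_in + n_out))
    return rng.uniform(-limit, limit, size=(n_in, n_out)), np.zeros(n_out)

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Same sizes and activations as the Keras model: 784 -> 128 -> 32 -> 128 -> 784
layers = [dense(784, 128), dense(128, 32), dense(32, 128), dense(128, 784)]
activations = [relu, relu, relu, sigmoid]

def forward(x):
    h = x
    for (W, b), act in zip(layers, activations):
        h = act(h @ W + b)  # one Dense layer: activation(x W + b)
    return h

def binary_crossentropy(x, x_hat, eps=1e-7):
    # Clip predictions away from 0 and 1 so the logs stay finite
    x_hat = np.clip(x_hat, eps, 1.0 - eps)
    per_pixel = -(x * np.log(x_hat) + (1.0 - x) * np.log(1.0 - x_hat))
    return per_pixel.mean(axis=-1).mean()  # mean over pixels, then over the batch

batch = rng.uniform(0.0, 1.0, size=(8, 784))  # stand-in for 8 flattened images
reconstruction = forward(batch)
loss = binary_crossentropy(batch, reconstruction)
print(reconstruction.shape, loss)
```

Training (`autoencoder.fit(x_train, x_train, ...)`) adjusts the four `(W, b)` pairs so that this loss falls.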

MODEL OUTPUT


```python
plot_autoencoder_outputs(autoencoder, 5, (28, 28))
```

MODEL TEST


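```python
# Synthetic test input (not an MNIST digit): a black 28x28 image
# with a 4-pixel-wide vertical stripe of intensity 0.8 in the middle
l = np.zeros((28, 12))
m = np.ones((28, 4)) * 0.8
r = np.zeros((28, 12))
I = np.concatenate((l, m, r), axis=1).reshape(1, 784)  # flatten to a batch of one
plt.imshow(I.reshape(28, 28))

# Reconstruct the stripe with the trained autoencoder
L = autoencoder.predict(I)
```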
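The stripe above can be wrapped in a small helper so that other positions or widths are easy to try. `make_vertical_bar` and its parameters are hypothetical names introduced for this sketch. With its defaults it builds exactly the same array as the `concatenate` construction in the cell above.

```python
import numpy as np

def make_vertical_bar(start=12, width=4, intensity=0.8, size=28):
    # Hypothetical helper: black size x size image with one vertical stripe
    img = np.zeros((size, size))
    img[:, start:start + width] = intensity
    return img.reshape(1, size * size)  # flattened batch of one, ready for predict()

bar = make_vertical_bar()

# The notebook's construction, for comparison
l = np.zeros((28, 12))
m = np.ones((28, 4)) * 0.8
r = np.zeros((28, 12))
reference = np.concatenate((l, m, r), axis=1).reshape(1, 784)
print(np.array_equal(bar, reference))  # → True
```

For example, `autoencoder.predict(make_vertical_bar(start=4))` would test a stripe near the left edge instead.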


```python
print(L.shape)  # (1, 784): one flattened reconstruction
plt.figure(figsize=(8, 8))
plt.imshow(L.reshape(28, 28))
```

<matplotlib.image.AxesImage at 0x7fc57026c700>

MODEL WEIGHTS VISUALIZATION


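```python
# The first Dense layer's kernel has shape (784, 128); transposing gives
# one 784-long row of input weights per hidden unit
weights = autoencoder.get_weights()[0].T

n = 10  # number of hidden units to show
plt.figure(figsize=(20, 5))
for i in range(n):
    ax = plt.subplot(1, n, i + 1)
    plt.imshow(weights[i].reshape(28, 28))  # one unit's weights as a 28x28 image
    ax.get_xaxis().set_visible(False)
    ax.get_yaxis().set_visible(False)
plt.show()
```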
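The same weight rows can also be assembled into a single strip image with NumPy and shown with one `imshow` call. This sketch assumes the input has shape `(units, 784)`, like `autoencoder.get_weights()[0].T`. Each filter is min-max scaled on its own, which roughly matches the per-subplot colour scaling in the loop above. The helper name `tile_filters` is hypothetical, and random numbers stand in for trained weights.

```python
import numpy as np

def tile_filters(weights_t, n=10, side=28):
    """Place the first n rows of weights_t side by side as one (side, side * n) image."""
    tiles = []
    for row in weights_t[:n]:
        img = row.reshape(side, side)
        span = np.ptp(img)
        # Min-max scale each filter to [0, 1]; a constant filter becomes all zeros
        img = (img - img.min()) / span if span > 0 else np.zeros_like(img)
        tiles.append(img)
    return np.hstack(tiles)

fake_weights_t = np.random.default_rng(0).normal(size=(128, 784))  # stand-in for trained weights
strip = tile_filters(fake_weights_t)
print(strip.shape)  # → (28, 280)
```

In the notebook this would become `plt.imshow(tile_filters(autoencoder.get_weights()[0].T), cmap='gray')`.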

DENOISING AUTO ENCODER

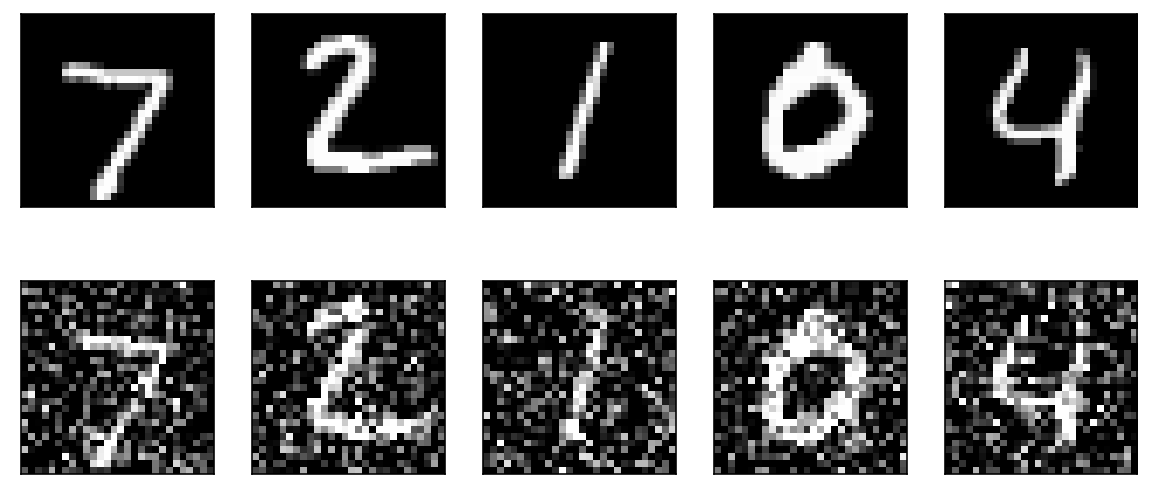

DATA CREATION


```python
noise_factor = 0.4

# Add Gaussian noise (standard deviation = noise_factor) to every pixel
x_train_noisy = x_train + noise_factor * np.random.normal(size=x_train.shape)
x_test_noisy = x_test + noise_factor * np.random.normal(size=x_test.shape)

# Clip back to the valid [0, 1] pixel range
x_train_noisy = np.clip(x_train_noisy, 0.0, 1.0)
x_test_noisy = np.clip(x_test_noisy, 0.0, 1.0)

n = 5
plt.figure(figsize=(10, 4.5))
for i in range(n):
    # plot original image (top row)
    ax = plt.subplot(2, n, i + 1)
    plt.imshow(x_test[i].reshape(28, 28))
    plt.gray()
    ax.get_xaxis().set_visible(False)
    ax.get_yaxis().set_visible(False)
    if i == n // 2:
        ax.set_title('Original Images')

    # plot noisy image (bottom row)
    ax = plt.subplot(2, n, i + 1 + n)
    plt.imshow(x_test_noisy[i].reshape(28, 28))
    plt.gray()
    ax.get_xaxis().set_visible(False)
    ax.get_yaxis().set_visible(False)
    if i == n // 2:
        ax.set_title('Noisy Input')
plt.show()
```
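Because `np.random.normal` is called without a seed, each run of the cell above produces a different noisy dataset. A minimal sketch of a reproducible alternative, assuming NumPy's `default_rng` Generator is acceptable, is shown below. `add_gaussian_noise` is a hypothetical helper name. It also casts the result back to the input dtype, since `float32` plus `float64` noise would otherwise give `float64`.

```python
import numpy as np

def add_gaussian_noise(x, noise_factor=0.4, seed=None):
    # Seeded Generator so the same noisy copy can be regenerated exactly
    rng = np.random.default_rng(seed)
    noisy = x + noise_factor * rng.normal(size=x.shape)
    # Clip to [0, 1] like the clean data, and keep the original dtype
    return np.clip(noisy, 0.0, 1.0).astype(x.dtype)

clean = np.random.default_rng(1).uniform(0.0, 1.0, size=(5, 784))  # stand-in images
a = add_gaussian_noise(clean, seed=42)
b = add_gaussian_noise(clean, seed=42)
print(a.shape, a.min() >= 0.0, a.max() <= 1.0, np.array_equal(a, b))  # → (5, 784) True True True
```

Using different seeds for the two splits, e.g. `add_gaussian_noise(x_train, 0.4, seed=0)` and `add_gaussian_noise(x_test, 0.4, seed=1)`, keeps the test noise independent of the training noise.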

MODEL


```python
# Same architecture as the normal autoencoder: 784 -> 128 -> 32 -> 128 -> 784
input_size = 784
hidden_size = 128
code_size = 32

input_img = Input(shape=(input_size,))
hidden_1 = Dense(hidden_size, activation='relu')(input_img)
code = Dense(code_size, activation='relu')(hidden_1)
hidden_2 = Dense(hidden_size, activation='relu')(code)
output_img = Dense(input_size, activation='sigmoid')(hidden_2)

autoencoder = Model(input_img, output_img)
autoencoder.compile(optimizer='adam', loss='binary_crossentropy')

# Noisy images are the input, clean images are the target
autoencoder.fit(x_train_noisy, x_train, epochs=10)
```

Epoch 1/10

1875/1875 [==============================] - 7s 2ms/step - loss: 0.2093

Epoch 2/10

1875/1875 [==============================] - 3s 2ms/step - loss: 0.1309

Epoch 3/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.1219

Epoch 4/10

1875/1875 [==============================] - 3s 2ms/step - loss: 0.1181

Epoch 5/10

1875/1875 [==============================] - 3s 2ms/step - loss: 0.1161

Epoch 6/10

1875/1875 [==============================] - 5s 2ms/step - loss: 0.1143

Epoch 7/10

1875/1875 [==============================] - 5s 2ms/step - loss: 0.1132

Epoch 8/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.1124

Epoch 9/10

1875/1875 [==============================] - 3s 2ms/step - loss: 0.1115

Epoch 10/10

1875/1875 [==============================] - 4s 2ms/step - loss: 0.1113

<keras.callbacks.History at 0x7fc570466790>

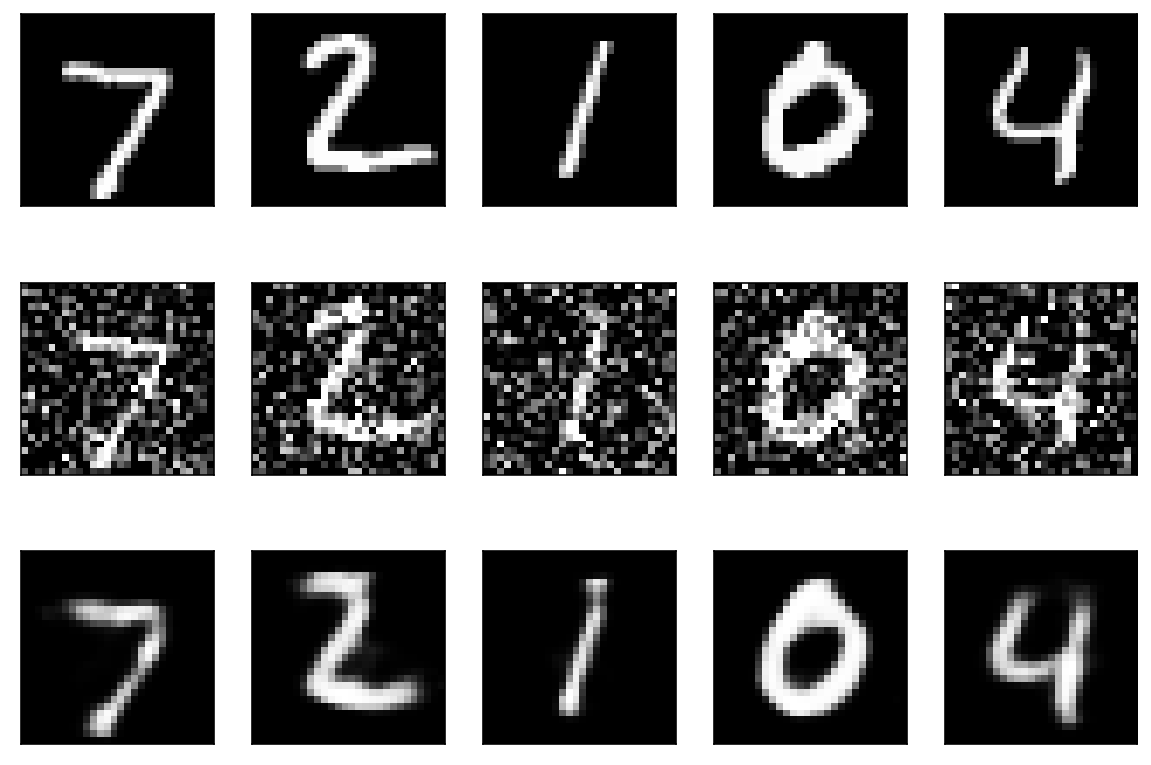

PLOTTING DATA


```python
n = 5
plt.figure(figsize=(10, 7))

# Denoise the first n noisy test images with the trained model
images = autoencoder.predict(x_test_noisy[:n])

for i in range(n):
    # plot original image (row 1)
    ax = plt.subplot(3, n, i + 1)
    plt.imshow(x_test[i].reshape(28, 28))
    plt.gray()
    ax.get_xaxis().set_visible(False)
    ax.get_yaxis().set_visible(False)
    if i == n // 2:
        ax.set_title('Original Images')

    # plot noisy image (row 2)
    ax = plt.subplot(3, n, i + 1 + n)
    plt.imshow(x_test_noisy[i].reshape(28, 28))
    plt.gray()
    ax.get_xaxis().set_visible(False)
    ax.get_yaxis().set_visible(False)
    if i == n // 2:
        ax.set_title('Noisy Input')

    # plot denoised reconstruction (row 3)
    ax = plt.subplot(3, n, i + 1 + 2 * n)
    plt.imshow(images[i].reshape(28, 28))
    plt.gray()
    ax.get_xaxis().set_visible(False)
    ax.get_yaxis().set_visible(False)
    if i == n // 2:
        ax.set_title('Autoencoder Output')
plt.show()
```

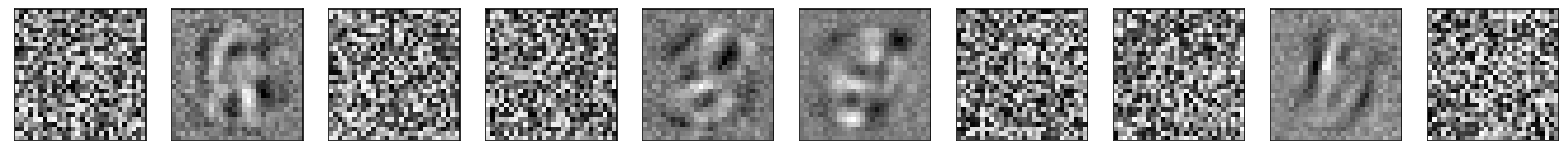
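The plot above compares the images by eye. One way to put a number on the denoising is to compare the mean squared error (MSE) of the noisy input against the clean image with the MSE of the reconstruction. MSE is an assumption chosen here for readability; the model itself was trained on binary cross-entropy. The helper names are hypothetical, and the toy arrays below are not results from this notebook.

```python
import numpy as np

def mse_per_image(a, b):
    # Mean squared pixel error for each flattened image in a batch
    return ((a - b) ** 2).mean(axis=1)

def denoising_report(clean, noisy, reconstructed):
    """Hypothetical helper: average MSE of the noisy input and of the reconstruction vs the clean images."""
    return {
        "noisy_vs_clean": float(mse_per_image(noisy, clean).mean()),
        "reconstructed_vs_clean": float(mse_per_image(reconstructed, clean).mean()),
    }

# Toy arrays; in the notebook one would pass x_test, x_test_noisy
# and autoencoder.predict(x_test_noisy)
clean = np.zeros((3, 784))
noisy = np.full((3, 784), 0.5)
reconstructed = np.full((3, 784), 0.1)
print(denoising_report(clean, noisy, reconstructed))
```

If the model is denoising, `reconstructed_vs_clean` should come out well below `noisy_vs_clean`.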

WEIGHT VISUALIZATION


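```python
# First-layer kernel of the denoising model, transposed to (128, 784):
# one row of input weights per hidden unit
weights = autoencoder.get_weights()[0].T

n = 10  # number of hidden units to show
plt.figure(figsize=(20, 5))
for i in range(n):
    ax = plt.subplot(1, n, i + 1)
    plt.imshow(weights[i].reshape(28, 28))  # one unit's weights as a 28x28 image
    ax.get_xaxis().set_visible(False)
    ax.get_yaxis().set_visible(False)
plt.show()
```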

THANKS